Landing page split testing is a powerful method to optimize website conversions by comparing two page versions. It involves directing traffic between variations to determine which performs best based on critical metrics, such as click-through rates, conversion rates, or user engagement. This method allows marketers to make data-driven decisions that reveal what truly resonates with visitors, whether an entirely new page design or a rearranged submission form.

Split testing offers a range of benefits that make it a vital tool in digital marketing. First, it provides clear, data-driven insights into what works best for engaging users and driving conversions, removing the guesswork from design and content decisions. Additionally, ongoing split tests can reveal shifting audience preferences over time, ensuring that pages remain relevant and competitive.

This article covers the fundamental aspects of landing page split testing, including the difference between split testing and A/B testing and split testing versus multivariate testing. It guides you through the process of creating a landing page split test effectively and recommends the best tools for managing and analyzing test results. Moreover, it highlights successful examples of landing page split testing to illustrate the potential impact of this approach on conversion rates.

Delve into this article to learn how split testing can elevate your conversion strategy. Discover the distinctions between various testing methods. Follow a step-by-step guide to setting up effective experiments. Explore top tools and inspiring success stories that reveal the undeniable impact of cutting-edge design.

What is a Landing Page Split Test?

A landing page split test (sometimes called an A/B test) is a structured experiment where website visitors are evenly divided between two page variations (50/50) to determine which version achieves higher conversions. This approach is a core technique in optimizing conversion rates.

Two principles keep a split test reliable: consistency and control. Consistency means maintaining a stable testing environment, so that any change in performance can be attributed solely to the elements being tested. This involves standardizing factors such as the audience, time of day, and external influences that could skew results. By keeping these variables constant, marketers can draw precise conclusions from their tests. Control, on the other hand, involves the careful management of the test process itself. This means having a well-defined hypothesis, clearly understanding what constitutes a successful outcome, and systematically implementing tests to validate or invalidate that hypothesis. Without control, results can be misleading and lead to decisions based on incomplete or erroneous data.

Together, these principles ensure that split testing is not just a trial-and-error process but a strategy to optimize landing pages. By adhering to consistency and control, marketers can more effectively enhance user experiences, boost conversion rates, and ultimately achieve better outcomes.

What is The Difference Between Split Testing and A/B Testing?

The difference between split testing and A/B testing is that split testing compares different page versions to evaluate significant design changes. In contrast, A/B testing focuses on minor tweaks to an existing page to optimize specific elements.

Split testing usually compares completely different versions of a page to see which one performs better. This is ideal for assessing significant changes, like redesigning a site or trying out a new layout. It allows businesses to understand what their audience prefers and how they interact with different designs.

A/B testing focuses on making small tweaks to an existing page. For example, you might change the color of a call-to-action button or rearrange the wording of a headline. A/B testing helps determine which minor adjustments lead to better results.

| Elements typically examined with split tests | Elements typically examined with A/B tests |
|---|---|
| design | CTA button |
| layout | headline |
| product page | copy |
| submission form | social proof elements |

It’s important to note that while all A/B tests can be considered a form of split testing, not every split test is an A/B test. Combining both methods can help businesses effectively refine their online presence and marketing efforts, leading to improved user experiences and higher conversion rates.

What is The Difference Between Split Testing and Multivariate Testing?

The difference between split testing and multivariate testing lies in their focus: split testing compares entirely different versions of a page, while multivariate testing explores the interactions between various elements on a single page. Multivariate testing is beneficial for examining multiple variables simultaneously. For example, you could test different headlines, images, and button colors to see how each combination impacts user behavior. This allows for a more detailed analysis of how various elements work together, which can lead to better optimization insights.

Using these methods together can help businesses refine their online strategies effectively, resulting in enhanced performance and engagement.
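To make the idea of testing combinations concrete, here is a minimal Python sketch that enumerates every variant a multivariate test would need. The option lists are illustrative assumptions (the headlines are borrowed from the headline example later in this article; the image and color labels are placeholders), not data from a real experiment.

```python
from itertools import product

# Hypothetical element options; the labels are placeholders, not real test data.
headlines = ["Get 20% Off Today!", "Exclusive Savings Just for You!"]
images = ["hero_photo", "product_closeup"]
button_colors = ["green", "orange", "blue"]

# Every combination of one headline, one image, and one button color.
combinations = list(product(headlines, images, button_colors))

for number, (headline, image, color) in enumerate(combinations, start=1):
    print(f"Variant {number}: {headline} | {image} | {color}")

print(f"Total variants to test: {len(combinations)}")
```

Two headlines, two images, and three colors already produce twelve variants, and traffic has to be shared among all of them. That is why multivariate tests generally need more visitors than a two-version split test.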

How to Create a Landing Page Split Test?

To create a landing page split test, concentrate on its objective, key metrics, time frame, traffic distribution, and thorough data analysis from the experiment. These elements make up a proper procedure that allows you to get the most out of the effort.

Creating a landing page split test is crucial for optimizing conversion rates, as it allows you to assess the most efficient elements of your page directly. By systematically testing different variations, you gain clear, data-backed insights into user preferences, leading to more effective page designs and ultimately driving higher engagement and conversions. This method removes guesswork, enabling continuous improvements that align with user behavior and market demands.

Landingi’s powerful platform further enhances this process, offering intuitive tools to build, run, and analyze split tests effortlessly. With its easy-to-use builder, customizable templates, and in-depth analytics, Landingi empowers marketers to quickly set up and monitor tests, making implementing changes based on real-time data simple. These capabilities streamline the entire testing journey, allowing you to maximize the impact of each landing page with confidence.

To set up a successful landing page split test, follow the core steps below to ensure accuracy and effectiveness.

1. Define your objective

Defining a clear objective is essential as it guides every decision in the split testing process. Pinpointing the specific metric you want to improve (click-through rate, form submissions, or sign-ups) will help you tailor each variant to achieve that goal. Without a defined objective, results can be difficult to interpret, making identifying what changes drive success challenging.

For example, if the goal is to increase form submissions, ensure that every aspect of the test – such as changes to the form layout, CTA color, or placement – is crafted to improve that specific outcome. Focusing on a measurable objective lets you track progress and make strategic adjustments based on accurate data.

2. Formulate a hypothesis

Formulating a hypothesis is a crucial step in landing page split testing because it provides a clear direction and purpose for the test. A well-structured hypothesis helps you define what you’re testing, why you’re testing it, and what specific outcome you expect. For example, a hypothesis like “Changing the product page layout will increase click-through rates by 10%” sets a clear objective that guides both the design of the test and the analysis of the results.

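Without a hypothesis, split tests risk becoming random experiments that yield no actionable insights. With one, it is easier to understand why one version performed better. Moreover, having a hypothesis encourages learning: whether it is confirmed or rejected, the outcome tells you something about your audience.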

This approach ensures that each test builds on the previous one, creating a continuous cycle of improvement that drives long-term success in landing page performance.

3. Create variants

Creating well-thought-out variants is critical to testing effectively. Each variant should focus on a single, impactful change, whether a headline, CTA, or image, allowing you to isolate elements influencing conversions. Avoid overloading variations with too many changes at once; simplicity here ensures that any performance shifts are due to the specific element being tested.

Adjusting a headline to emphasize a discount (e.g., “Get 20% Off Today!” vs. “Exclusive Savings Just for You!”) might reveal wording that captures visitor attention. By isolating specific changes in each variant, you ensure that the effect of each adjustment is clear and actionable.

4. Set up the test

Setting up the test correctly is crucial to ensure accurate and meaningful results. Ensure that each variant is displayed uniformly, maintaining a consistent design and layout across all devices and browsers. This consistency prevents biases arising from technical issues, so the test reflects user responses only to the changes you are testing. Also establish a statistical significance threshold before launch (a 95% confidence level is a common choice), so that you know how much data you need to gather before making an informed decision.

For example, if you’re testing a new CTA color on your landing page, ensure that the color displays consistently across all browsers and devices. Inconsistencies, such as the button appearing correctly in Chrome but not in Safari, could distort the results and make it unclear if the color change genuinely impacts conversions.
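Knowing how much data is "enough" can be estimated before the test starts. The sketch below uses a standard sample size approximation for comparing two conversion rates, built only on Python's standard library. The function name, the 5% baseline rate, the 6% target rate, and the 80% power setting are all assumptions chosen for illustration; they do not come from any particular testing tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect the given change.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to compute a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * pooled * (1 - pooled))
        + z_beta * sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical scenario: 5% baseline conversion rate, hoping to reach 6%.
print(sample_size_per_variant(0.05, 0.06))
```

Under these assumed inputs, the requirement comes out at several thousand visitors per variant. Smaller expected improvements push the number up quickly, which is worth knowing before committing to a test.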

5. Distribute traffic

Distributing traffic evenly between variants is essential for unbiased results. Ensure each version receives a comparable sample size to minimize random variation and achieve statistically significant outcomes. With a large audience, an even split works well. With a smaller audience, keep the number of variants low so that each one receives a larger share of traffic and delivers insights sooner.

For example, an ecommerce site can split its traffic 50/50 between two different product page layouts. If the audience is smaller, however, directing a larger portion of traffic to the test variation can speed up insights. This careful distribution ensures each variant receives enough exposure for an accurate comparison, making the results statistically valid.
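One way traffic distribution is often implemented is by hashing a visitor identifier, so that the same visitor always sees the same variant. The following is a minimal sketch of that idea, not a description of how any specific platform works. The function name `assign_variant`, the `share_b` parameter, and the visitor IDs are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, test_name: str, share_b: float = 0.5) -> str:
    """Deterministically assign a visitor to variant "A" or "B".

    share_b is the fraction of traffic sent to variant B (0.5 means 50/50).
    """
    digest = hashlib.sha256(f"{test_name}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < share_b else "A"


# Hypothetical visitor IDs: each one always lands on the same variant.
for visitor in ["visitor-001", "visitor-002", "visitor-003"]:
    print(visitor, assign_variant(visitor, "product-page-layout"))
```

Including the test name in the hash means a visitor's assignment in one test does not determine their assignment in another. Changing `share_b` shifts the split away from 50/50 when a different allocation is wanted.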

6. Run the test

Running the test adequately is vital to capture user behavior accurately. Refrain from ending the test prematurely, as short testing periods may lead to inconclusive or misleading results. Let the test run until it reaches statistical significance, ensuring your findings are robust and representative of long-term trends.

Remember that the timing of your test can significantly influence the results and even undermine the purpose of testing itself. Many online businesses experience predictable peak and off-peak periods. For example, comparing e-commerce website traffic on Black Friday with traffic on an ordinary day is unlikely to yield reliable, comparable data. Choosing consistent timeframes ensures a more accurate understanding of what works for your audience.

7. Analyze results

Analyzing test results carefully is essential to making data-driven decisions that enhance your landing page’s effectiveness. Begin by examining the primary metric associated with your objective, such as conversion, click-through, or bounce rates, to determine which variant performed best. Look for statistically significant differences, meaning results that are unlikely to be due to random chance, as this increases the reliability of your findings. Use tools like Landingi’s built-in analytics or Google Analytics to dig deeper into user interactions, breaking down data by device type, geographic location, or even time of day to uncover specific patterns.

For example, suppose a new CTA led to a 20% increase in sign-ups on desktop but showed no change on mobile. This insight can reveal unique user behaviors on different platforms, prompting further testing tailored to each device type. Reviewing secondary metrics, such as engagement time and scroll depth, is helpful as they can provide context to your main results and reveal unexpected user behavior. A thorough analysis confirms which variant succeeded and offers insights into audience preferences, guiding future testing and design decisions.
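A common way to check whether a difference is statistically significant is a two-proportion z-test. The sketch below applies it per device segment using only Python's standard library. The visitor and conversion counts are invented for illustration and loosely mirror the desktop versus mobile scenario above; the function name is an assumption.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z statistic, two-sided p-value, relative lift of B over A).

    Assumes each variant has visitors and the pooled rate is not 0 or 1.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_lift = (rate_b - rate_a) / rate_a
    return z, p_value, relative_lift


# Hypothetical counts: (conversions_a, visitors_a, conversions_b, visitors_b).
segments = {
    "desktop": (100, 2000, 120, 2000),
    "mobile": (90, 2000, 90, 2000),
}

for device, counts in segments.items():
    z, p_value, lift = two_proportion_z_test(*counts)
    verdict = "significant" if p_value < 0.05 else "not significant"
    print(f"{device}: lift {lift:+.1%}, z = {z:.2f}, p = {p_value:.3f} ({verdict})")
```

In this made-up data, the desktop variant shows a 20% relative lift, yet the p-value stays well above 0.05. An impressive-looking lift on a modest sample may still be noise, which is why the significance check matters.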

8. Implement changes

Implementing changes based on test findings improves your landing page’s performance. Roll out the winning variant and make it the new standard, or run additional tests on top-performing elements to optimize further. Each implemented change brings you closer to a landing page fully aligned with user preferences.

9. Keep a record of findings

Maintaining a record of each test’s findings is essential for long-term optimization. Documenting both positive and negative changes helps you build a valuable resource for future tests. This record enables you to identify patterns over time, refining your strategies and making informed adjustments more efficiently.
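A record of findings does not need special software. As one possible approach, the sketch below stores each finished test as a line in a JSON Lines file. The field names, the `SplitTestRecord` class, and the example values are all hypothetical; adapt them to whatever your team actually tracks.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class SplitTestRecord:
    name: str
    hypothesis: str
    primary_metric: str
    start_date: str
    end_date: str
    winner: str
    relative_lift: float
    notes: str = ""


def append_record(record: SplitTestRecord, path: Path) -> None:
    """Append one test record to the log file as a single JSON line."""
    with path.open("a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(asdict(record)) + "\n")


def load_records(path: Path) -> list[SplitTestRecord]:
    """Read every record back from the log file."""
    with path.open(encoding="utf-8") as log_file:
        return [SplitTestRecord(**json.loads(line)) for line in log_file if line.strip()]


# Hypothetical entry; the values are invented for illustration.
example = SplitTestRecord(
    name="cta-color",
    hypothesis="A higher-contrast CTA will increase form submissions",
    primary_metric="form submissions",
    start_date="2024-01-08",
    end_date="2024-01-29",
    winner="B",
    relative_lift=0.12,
    notes="Effect seen on desktop only; retest on mobile.",
)
```

Calling `append_record(example, Path("split_tests.jsonl"))` would add the entry to a log file, and `load_records` reads the full history back for review. Recording losing and inconclusive tests in the same file keeps the negative findings visible too.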

Following these steps enables businesses to make data-driven decisions that enhance the user experience and boost conversion rates. Consistently testing, analyzing, and refining landing pages does more than improve their performance; it plays a vital role in the broader effectiveness of digital marketing campaigns. By identifying what resonates with users, businesses can create more engaging and relevant experiences and improve return on investment (ROI).

3 Successful Examples of Landing Page Split Testing

Analyzing successful landing page examples provides valuable insights into the effectiveness of well-executed digital strategies. Marketers can learn the key elements that contribute to a landing page’s success by exploring real-life cases.

Understanding what works in these examples can inform your split testing, allowing you to refine your own landing pages based on proven practices. Look at the three selected examples below to discover the strategies that drive engagement and results.

1. Josera

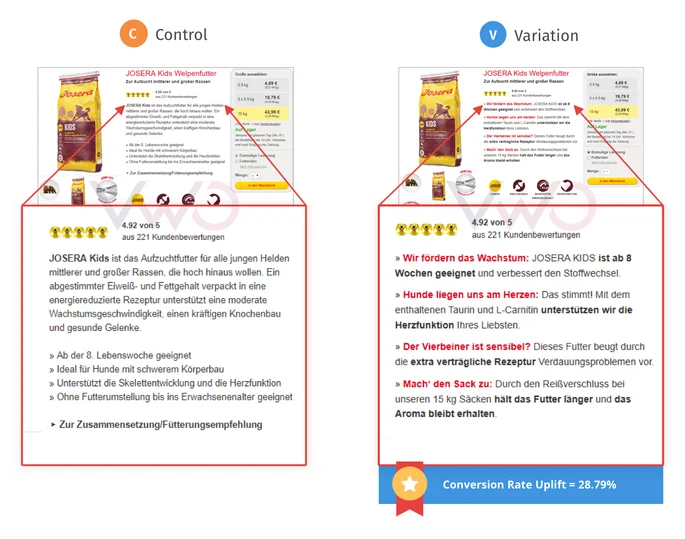

Our first split testing example centers around a modification to the product detail page, explicitly targeting users who were initially uninterested in making a purchase.

By analyzing user behavior and feedback, the team sought to understand which elements could be adjusted to capture interest more effectively. The analysis uncovered a worryingly high bounce rate on JOSERA’s product detail pages and, even more significantly, very low sales conversion rates. The team concluded that a complete remake of the product detail page would improve the situation, so they created a variation with a distinctly more precise description than the original product detail page.

The split test powered by the VWO platform engaged 2,400 users over 16 days. Traffic was evenly distributed between the two experiences, ensuring a fair comparison of the changes implemented. The variation recorded a staggering 28.79% increase in conversions.

2. Server Density

The second example comes from Server Density, a SaaS provider of hosting and website monitoring. Initially, their pricing was cost-based, but after shifting to a packaged, value-focused offer, they saw overall revenue increase by 114%.

This new model not only boosted earnings but also decreased free trial sign-ups from non-committed users. The example underscores the profound impact a thorough redesign can have on revenue. When a landing page is reimagined with user-centric elements, compelling visuals, and strategic CTAs, it doesn’t just look better; it performs better.

3. JellyTelly

The third example is JellyTelly, a television network targeting Christian families. Never Settle, a digital agency based in Denver, offered the broadcasting company simple yet powerful changes to its home page. The idea behind the changes was to create a variation with fewer distractions.

The variation outperformed the original, driving 105% more visitors to the sign-up page and a 5% increase in total sign-ups for JellyTelly. The boost in visitors to the sign-up page was undoubtedly significant, but the team noted room for improvement, with conversions lower than expected.

It’s a perfect example of how a successful split test on one website doesn’t guarantee the same results elsewhere. Treat the results as input for deeper analysis that may lead to another, more refined experiment.

Can I Split-Test a Website?

Yes, you can split-test a website to optimize its performance. It allows you to compare two webpage versions to determine which performs better based on a specific metric, such as click-through rate, conversion rate, or user engagement. This method involves creating multiple page variants with different design, layout, content, and call-to-action elements.

Visitors are then randomly directed to one of these versions, and their interactions are analyzed to see which version yields the best results. For example, a website might test two homepage designs, one with a bold CTA and the other with a subtle CTA, to identify the best solution.

Split testing offers valuable insights that can significantly improve user experience and business outcomes. Businesses can make data-driven decisions by continually testing different elements and tailoring their websites to match user preferences and behaviors. Ultimately, split testing is an essential tool for website optimization, helping to reveal features that resonate with users and driving measurable growth by eliminating guesswork from design choices.

How Do I Test Multiple Landing Pages?

To test multiple landing pages, you need to define the desired result and then create the variations with specific changes to achieve the objective. Establishing these initial points allows you to choose an adequate type of test. Take a look at three main types of landing page tests that are commonly used:

- Split testing: In this kind of testing, traffic is routed to two different landing pages with separate URLs. This technique is especially beneficial for evaluating the effects of major changes, such as a total redesign or the introduction of new value propositions.

- A/B testing: This technique involves comparing two variations of a page (Version A and Version B) to pick out the best-performing one. An example would be testing different colors for a call-to-action button to see which drives more clicks.

- Multivariate testing: This method assesses multiple elements, such as headlines, images, and call-to-action buttons, to find the most effective combination that enhances conversion rates.

By systematically experimenting with various elements, marketers can leverage data to inform their decisions, leading to more effective landing pages and improved conversion rates. This allows for the optimization of individual components and contributes to a more strategic understanding of user behavior and preferences, ultimately driving better results in digital marketing campaigns.

What is The Best Landing Page Split Testing Tool?

The best landing page split testing tool is Landingi, unquestionably a leading platform in digital marketing. It streamlines creating and launching tests, even for those with minimal technical experience. Users can easily duplicate landing pages, modify elements – like CTA buttons, headlines, images, or forms – and test these variations to see which version achieves better results.

One of Landingi’s strengths is its extensive library of customizable templates tailored for various industries, simplifying the design process while maintaining effectiveness.

The platform offers robust analytics tools, allowing users to track real-time conversion rates, bounce rates, and other key performance indicators. This information is crucial for making informed decisions about landing page optimization.

Compared to other tools like Unbounce or VWO, which also have strong testing capabilities, Landingi is often praised for its affordability and ease of use, making it accessible for businesses of all sizes. According to sources such as HubSpot and Digital Marketing Community, Landingi is frequently recommended for its comprehensive features and user-centric design, which facilitate a more efficient testing process.

What Are The Limitations of Landing Page Split Testing?

The limitations of landing page split testing are traffic requirements, time consumption, cost implications, complexity of implementation, limited insight into user behavior, and the risk of misinterpretation. Each limitation is described in detail below:

Traffic requirements

A landing page needs a substantial amount of traffic to achieve statistically significant results. Low-traffic pages may take a long time to gather enough data for reliable conclusions, making split testing impractical.
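A quick back-of-the-envelope calculation shows how traffic volume drives test duration. The sketch below is only an estimate under assumed inputs: the required sample of 8,000 visitors per variant and the daily traffic figures are hypothetical, and the function name is made up for this example.

```python
from math import ceil


def estimated_test_days(required_per_variant: int, variant_count: int, daily_visitors: int) -> int:
    """Estimate how many days a test needs, assuming traffic is split evenly."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    return ceil(required_per_variant * variant_count / daily_visitors)


# Hypothetical pages that both need 8,000 visitors per variant across two variants.
print("Low-traffic page (400 visitors/day):", estimated_test_days(8000, 2, 400), "days")
print("High-traffic page (4,000 visitors/day):", estimated_test_days(8000, 2, 4000), "days")
```

With these assumed numbers, the low-traffic page would need about 40 days to collect the same data the high-traffic page gathers in 4.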

Time consumption

Setting up and running tests can be time-consuming. It often takes weeks to complete a test, particularly if the test variations are subtle or if the traffic is limited. Many marketers find that they run inconclusive tests, leading to frustration and wasted time.

Cost implications

If split testing involves paid traffic (like ads), costs can accumulate quickly. This can make it an expensive endeavor, especially if one version underperforms, resulting in lost revenue during the testing phase.

Complexity of implementation

Implementing split tests requires technical skills to track and measure results accurately. Using multiple tracking mechanisms or integrating with existing systems can complicate the testing process further.

Limited insight into user behavior

While split tests can show which version performs better, they often do not provide deep insights into why one version outperforms another, especially when tests are run without a tool that facilitates data collection. Understanding user behavior may require additional qualitative research methods.

Risk of misinterpretation

Statistical significance does not always translate to practical relevance. A result may be statistically significant yet too small to meaningfully improve conversion rates. This risk grows when the objectives of the test have not been thoroughly defined.
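One way to separate statistical from practical relevance is to look at a confidence interval for the difference in conversion rates and compare it with the smallest improvement that would be worth acting on. The sketch below illustrates this with invented numbers. The function names and the "minimum worthwhile difference" threshold are assumptions, and the right threshold depends on your own business goals.

```python
from math import sqrt
from statistics import NormalDist


def difference_confidence_interval(conversions_a, visitors_a, conversions_b,
                                   visitors_b, confidence=0.95):
    """Confidence interval for (rate B - rate A), using the normal approximation."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    std_error = sqrt(
        rate_a * (1 - rate_a) / visitors_a + rate_b * (1 - rate_b) / visitors_b
    )
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    difference = rate_b - rate_a
    return difference - z * std_error, difference + z * std_error


def is_practically_relevant(interval, minimum_worthwhile_difference):
    """True only if even the low end of the interval clears the threshold."""
    lower, _ = interval
    return lower >= minimum_worthwhile_difference


# Hypothetical large test: 5.0% vs. 5.2% conversion on 200,000 visitors each.
interval = difference_confidence_interval(10_000, 200_000, 10_400, 200_000)
print(f"95% interval for the difference: {interval[0]:.4f} to {interval[1]:.4f}")
print("Statistically significant:", interval[0] > 0)
# Assumed business threshold: at least half a percentage point of improvement.
print("Practically relevant:", is_practically_relevant(interval, 0.005))
```

In this made-up case the whole interval sits above zero, so the difference is statistically significant. It also sits entirely below the assumed half-point threshold, so the change may not justify the effort of rolling it out.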

Understanding these limitations can help marketers strategize their split testing efforts more effectively, balancing the need for data-driven decisions with practical considerations.

Conclusion

Landing page split testing is essential for refining marketing strategies and maximizing conversion rates. By choosing the right approach and establishing clear key performance indicators (KPIs), marketers can effectively analyze the impact of different elements on user behavior. This process reveals valuable insights and empowers marketers to make informed, data-driven decisions that enhance overall campaign performance. With the importance of this practice already established, it’s clear that prioritizing split testing can elevate any marketing initiative.

Landingi offers a suite of features designed to enhance landing page split testing strategies, making it an ideal choice for marketers aiming to optimize conversion rates effectively. One of the standout aspects of Landingi is its user-friendly interface with a drag-and-drop editor. This functionality allows users to create and modify landing pages with ease, eliminating the need for technical skills. As a result, marketers can quickly set up multiple versions of landing pages for split testing, significantly reducing the time required to launch tests.

The platform supports multivariate testing, enabling marketers to assess multiple elements simultaneously. This capability offers more profound insights into how different combinations of elements interact and influence conversion rates. By utilizing these features, Landingi empowers marketers to enhance their landing page split testing strategies, ultimately leading to improved conversion rates and more effective digital marketing campaigns.

The time to hesitate is through – try Landingi for free and experience its robust capabilities in elevating your page results!